In an in-depth report published last week, the California Government Operations Agency (CalGovOps) offers an insightful assessment of the potential benefits and risks of Generative AI (GenAI) and explores a number of practical uses in State government. The collaborative report is the first product of Governor Newsom's Executive Order on Generative Artificial Intelligence, issued in September.

Axyom, a long-time partner to State and Local agencies, is closely following this ongoing work. Today, we are highlighting some of the report's key takeaways.

Home to 35 of the world's top 50 AI companies, California leads the world in GenAI innovation and research, and the State is positioning itself as a forward-thinking champion of the technology's ethical and transparent use. The report recognizes that GenAI has the potential to dramatically improve service delivery outcomes and to increase access to, and use of, government programs. It also weighs the risks inherent in using the technology and lays out an initial framework for mitigating those threats.

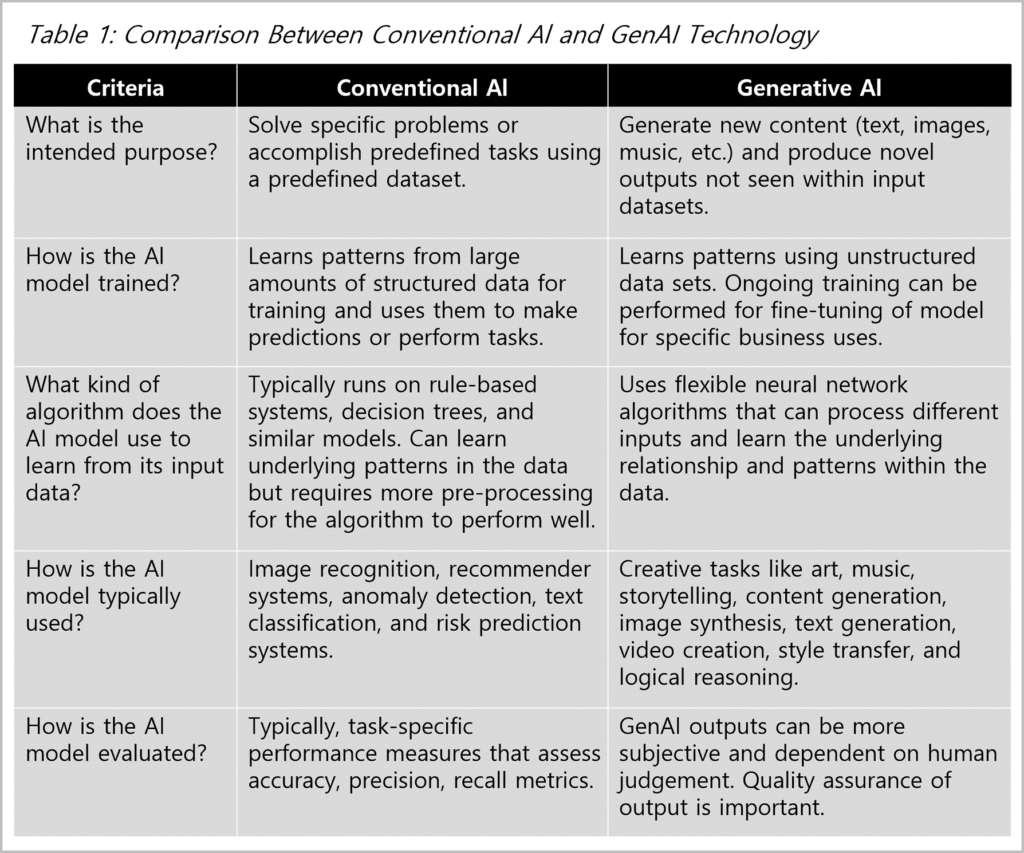

The report notes that the work now underway focuses primarily on GenAI, as opposed to conventional AI, which is usually designed for specific tasks and is often limited by the scope of its training data and by the technical expertise of the programmer. The report offers a helpful comparison between GenAI and conventional AI, as illustrated in Table 1.

GenAI's capacity to learn makes it easier for the State to design services and products that respond to Californians' diverse needs across geography and demography. GenAI solutions can recommend ways to present complex information so that it resonates best with different audiences, or highlight information from multiple sources that is relevant to a specific individual. These functions can further California's goals by optimizing government experiences, giving Californians greater access to state information and services, and by advancing equity, inclusion, and accessibility in outcomes. Example use cases include:

- Apply GenAI to government service data to identify specific groups or subsets of participants who may benefit from additional outreach, support services, and resources based on their circumstances and needs (for example, local job training for people claiming the Earned Income Tax Credit (EITC)).

- Use GenAI to identify groups that, for language or other reasons, disproportionately fail to access services, by analyzing feedback surveys or comments for language that indicates accessibility difficulties. This can help reveal opportunities to improve access.

▮ Optimize workloads for environmental sustainability

Incorporating GenAI in government can advance environmental sustainability by optimizing resource allocation, maximizing energy efficiency and demand flexibility, and promoting eco-friendly policies. For instance, the technology can enhance operational efficiency, decrease paper usage and waste, and support environmentally conscious governance. Example use cases include:

- GenAI could analyze traffic patterns, ride requests, and vehicle telemetry data to optimize routing and scheduling for state-managed fleets such as buses, waste collection trucks, or maintenance vehicles. By minimizing mileage and unnecessary trips, GenAI could reduce the associated fuel use, emissions, and costs.

- GenAI simulation tools could model the carbon footprint, water usage, and other environmental impacts of major infrastructure projects. By running millions of scenarios, GenAI could help planning agencies and permit reviewers identify the options that are likely to be the most sustainable.

The report recommends that government leaders prioritize GenAI proposals offering the highest potential benefits, paired with appropriate risk mitigations, over those whose benefits are not significant compared with existing work processes. Research conducted within state government, informed by feedback from subject matter experts and community groups, has produced an emerging picture of the specific risk factors GenAI poses compared with conventional AI. In our next post, we'll examine the State's initial approach to identifying and mitigating these risks.